Algorithms shape much of what we see, choose, and do online. Contrary to popular belief, they are not impartial tools, and the assumption that they are often hides the biases built into them. This blog examines how bias enters algorithms, how it affects people in the real world, and why clearer oversight and accountability in building these systems are urgently needed.

Understanding Algorithmic Bias

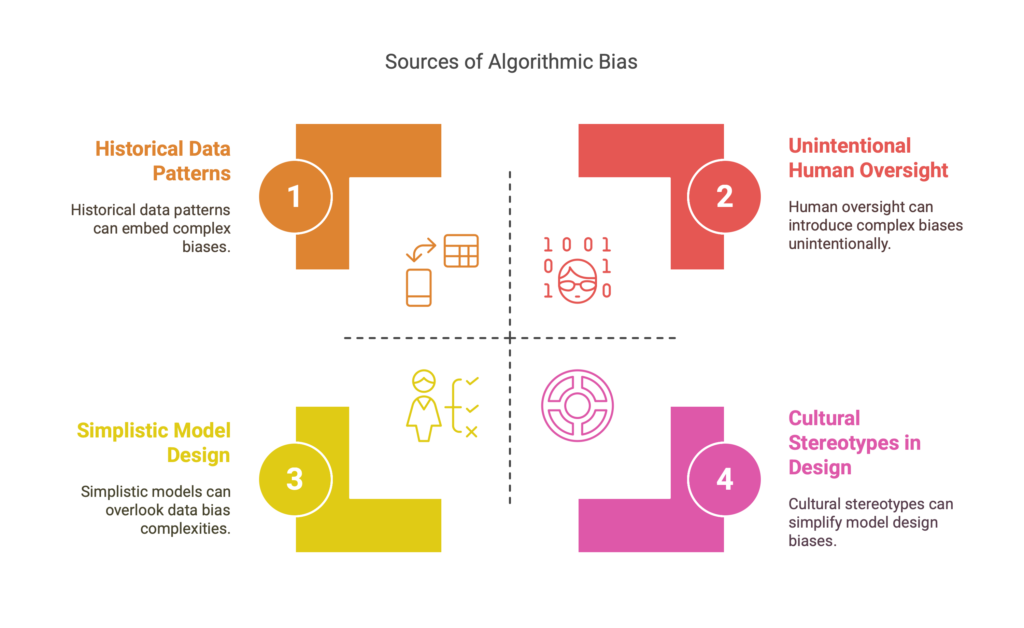

Algorithmic bias occurs when an algorithm produces systematically unfair outcomes because of flaws in its data or design. It usually arises from three main sources:

- Data Bias: Many algorithms learn from historical data, which can encode past discrimination. For example, a hiring tool trained on records from a company that historically excluded certain groups may learn to repeat that exclusion.

- Model Bias: Design choices can produce unfair results even when the data is sound. A model tuned only for overall accuracy, for instance, may perform well for the majority while making far more errors for smaller groups whose needs it was never optimized for.

- Human Bias: The people creating algorithms can unintentionally add their own biases. Choices like what data to use, how to define success, or which problems to solve can reflect personal beliefs or cultural stereotypes.
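To make the data-bias point concrete, here is a minimal Python sketch. It assumes a small set of hypothetical past hiring records and a deliberately naive "model" (`train_frequency_model` and `score` are names made up for this example) that simply learns how often each kind of candidate was hired before. Real hiring tools are far more complex, but the underlying risk is similar: patterns in the training data become patterns in the predictions.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, qualified, hired).
# In this toy data, qualified candidates from group "B" were hired less often.
HISTORY = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
    ("A", False, False), ("A", False, False),
    ("B", False, False), ("B", False, False),
]


def train_frequency_model(records):
    """Learn the past hire rate for each (group, qualified) combination."""
    counts = defaultdict(lambda: [0, 0])  # (group, qualified) -> [hired, total]
    for group, qualified, hired in records:
        counts[(group, qualified)][0] += int(hired)
        counts[(group, qualified)][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}


def score(model, group, qualified):
    """Predicted hire probability; unseen combinations default to 0.0."""
    return model.get((group, qualified), 0.0)


model = train_frequency_model(HISTORY)
print(score(model, "A", True))  # 0.75
print(score(model, "B", True))  # 0.25
```

Both candidates are equally qualified, yet the group B candidate scores three times lower, purely because that is what the history shows. Deleting the group column does not guarantee a fix, since other features (such as zip code) can act as proxies for it.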

As algorithms are increasingly used in high-stakes areas such as hiring, policing, and healthcare, addressing these biases is essential for fairness and accuracy.

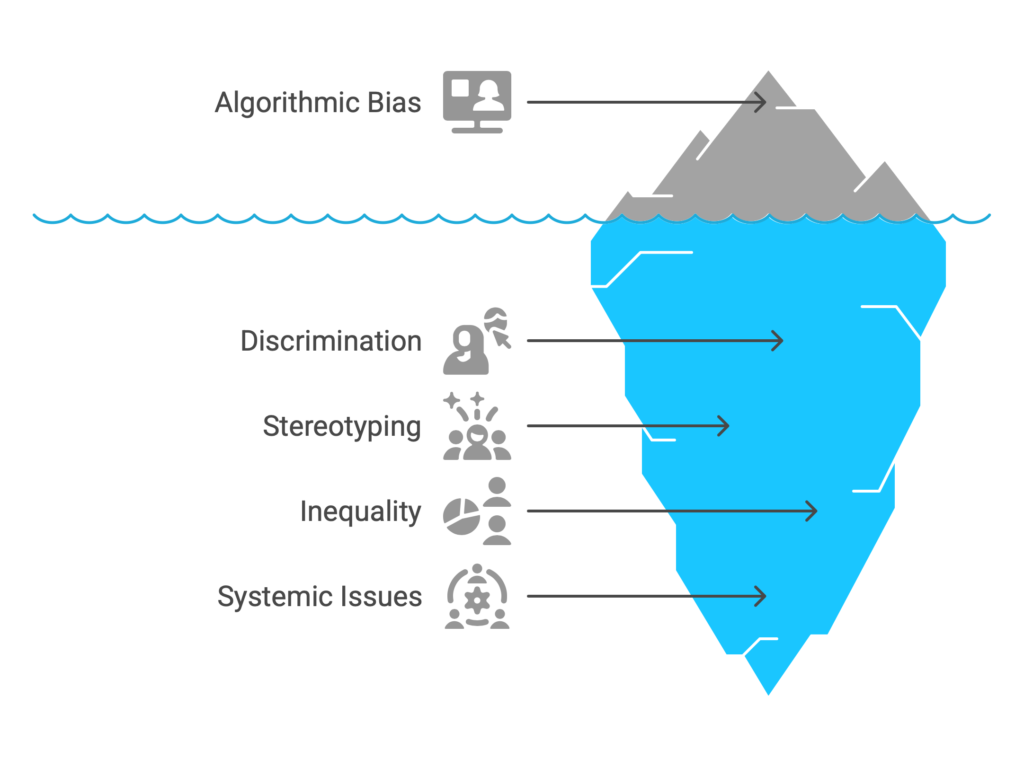

Implications of Algorithmic Bias

Effects of Algorithmic Bias

Biased algorithms can have serious and widespread impacts. They can reinforce harmful stereotypes, entrench existing inequities, and discriminate against people based on factors like race, gender, or income level. For instance:

- In criminal justice, biased algorithms may disproportionately target marginalized groups, deepening inequality.

- In hiring, flawed algorithms could screen out qualified candidates from minority backgrounds, limiting their opportunities.

Real-World Examples

- Facial Recognition Systems: Research has found that many facial recognition tools have markedly higher error rates for women and for people of color, increasing risks like false arrests and privacy violations.

- Crime-Prediction Tools: Algorithms used to predict crime often direct more attention to areas with higher reported crime, which are frequently communities of color. More patrols in those areas lead to more recorded incidents, which the algorithm then treats as confirmation. The result is a self-reinforcing loop of over-policing and distrust.
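The feedback loop can be illustrated with a toy simulation. This is a simplified, hypothetical model, not a description of any real predictive-policing product: two areas are assumed to have identical underlying crime, incidents are recorded only where patrols are sent, and each day every patrol goes to the area with the most recorded incidents so far. The function `simulate_patrols` and its parameters are invented for this illustration.

```python
def simulate_patrols(recorded, detections_per_patrol, patrols_per_day, days):
    """Toy feedback loop between patrol allocation and recorded crime.

    Both areas are assumed to have the same underlying crime; the only
    difference is where incidents get observed and recorded.
    """
    recorded = dict(recorded)  # copy so the caller's data is not mutated
    for _ in range(days):
        # Send every patrol to the area that currently looks worst in the data.
        target = max(recorded, key=recorded.get)
        # Only the patrolled area generates new records.
        recorded[target] += detections_per_patrol * patrols_per_day
    return recorded


start = {"north": 11, "south": 10}  # north starts one incident ahead
result = simulate_patrols(start, detections_per_patrol=1, patrols_per_day=5, days=30)
print(result)  # {'north': 161, 'south': 10}
```

A one-incident head start is enough for north to look about sixteen times more "crime-prone" in the data after a month, even though both areas were assumed to be identical. The data never gets a chance to correct itself, because south is never observed.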

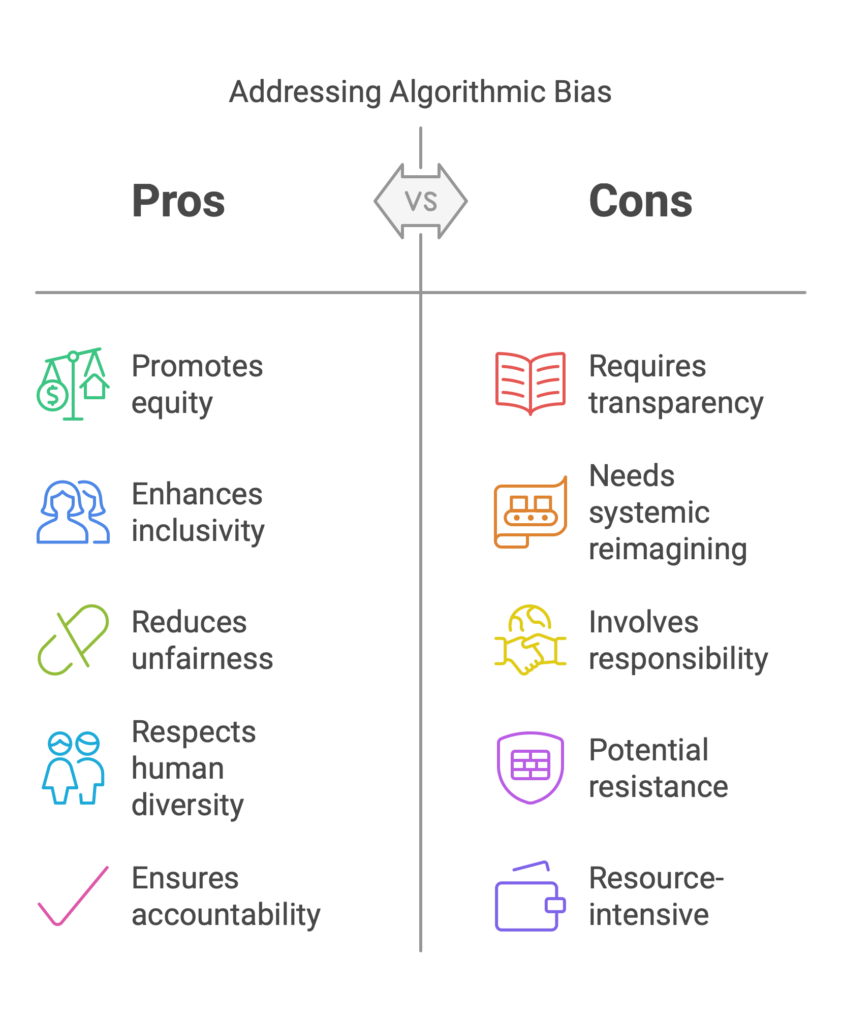

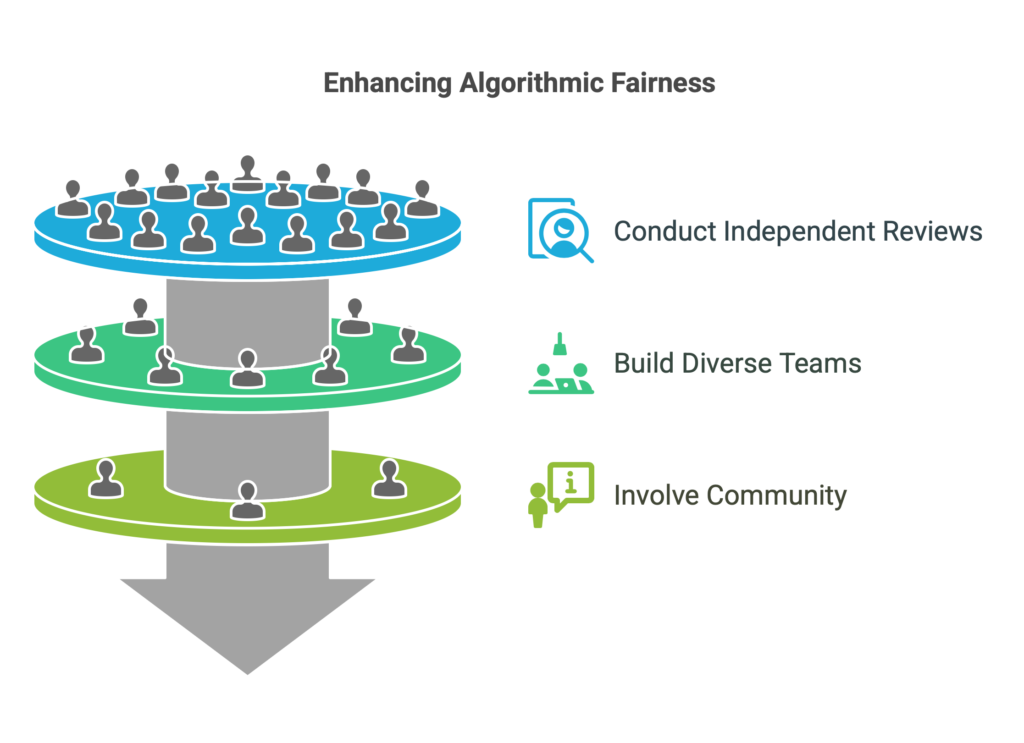

Addressing Algorithmic Bias Through Transparency and Accountability

Fighting algorithmic bias requires transparency about how algorithms work and what data they rely on. Developers, organizations, and governments must collaborate to create standards that ensure fairness and accountability. Key steps include:

- Independent Reviews: Regular audits by third parties can uncover hidden biases, for example by comparing outcomes and error rates across demographic groups.

- Building Diverse Teams: Teams with varied backgrounds (e.g., race, gender, or expertise) are more likely to notice blind spots and design systems that work for everyone.

- Community Involvement: Including the people affected by algorithms in conversations about how they are built and used raises awareness and builds pressure for responsible technology.
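One simple check an independent review might run is to compare selection rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest rate to the highest, then flags ratios under 0.8, a threshold borrowed from the "four-fifths" rule of thumb in the U.S. Uniform Guidelines on Employee Selection Procedures. The decision data and function names are hypothetical, and a real audit would examine many more measures, such as error rates for each group.

```python
def selection_rates(decisions):
    """Return the share of positive decisions for each group.

    `decisions` is a list of (group, selected) pairs.
    """
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical audit data: 6 of 10 group A applicants selected, 3 of 10 from group B.
decisions = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'A': 0.6, 'B': 0.3}
print(ratio)  # 0.5
print("flag for review" if ratio < 0.8 else "within four-fifths threshold")
```

A ratio below the threshold does not prove discrimination on its own; it signals that the system deserves a closer look.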

Closing Summary

The belief that algorithms are neutral is misleading and can deepen existing social inequities. For technology to benefit everyone fairly, we must openly examine how bias enters algorithms and hold their creators and users accountable. Progress requires redesigning systems with inclusivity as a priority, so that algorithms respect the varied realities of human life.